Little Universe

Projection Mapping, Physical Computing, Blob Tracking

Credits:

In collaboration with SHI Weili & Miri Park.

Exhibitions:

Submitted to ARS ELECTRONICA 2016.

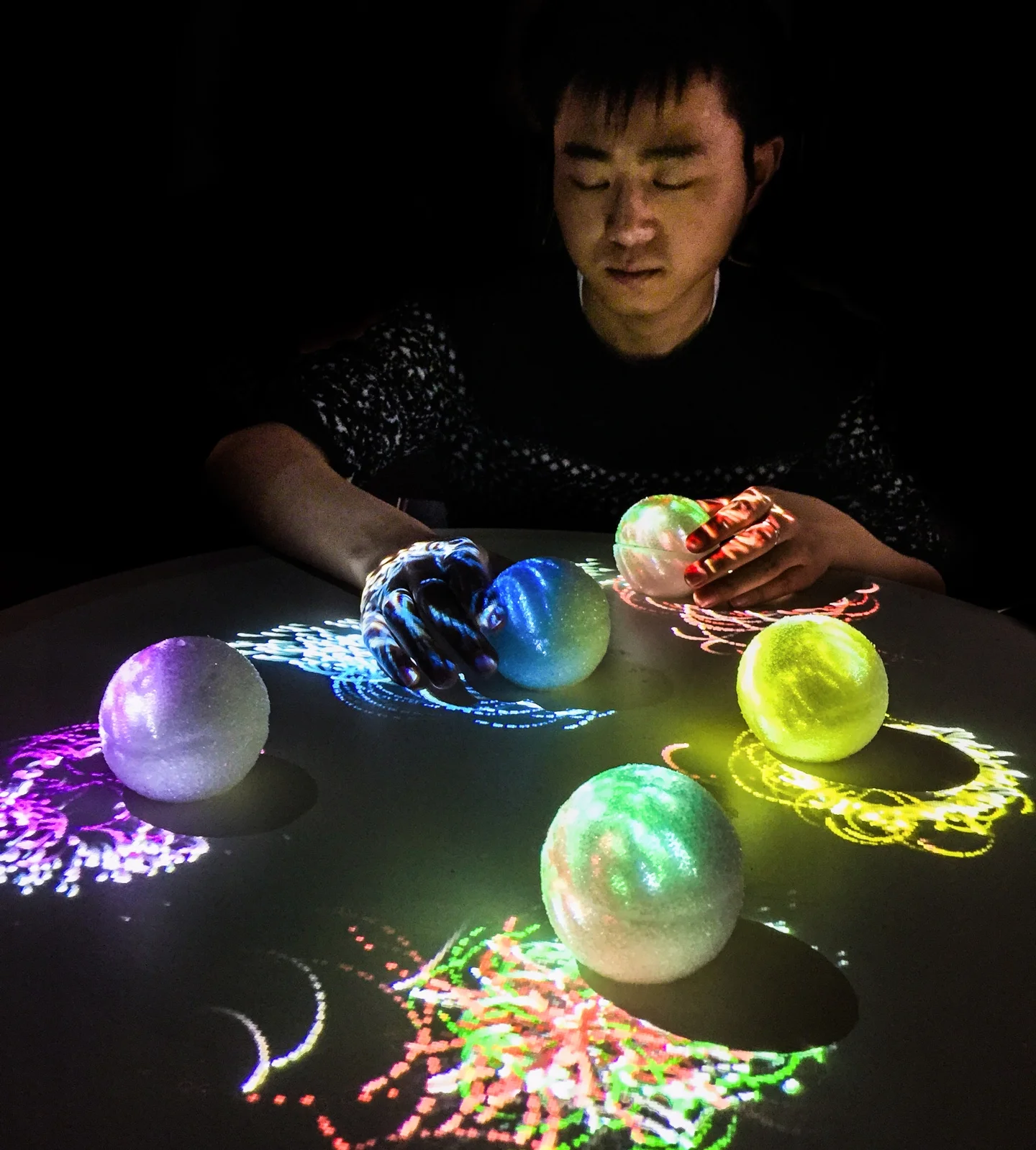

Little Universe is an interactive installation of poetic objects that revolve around themselves and whisper to each other, whether or not they are visited by you, their exotic guest. The objects are colorful, yet they are not mindless clouds of stardust. Each object has its own temperament: a tendency to draw close to some and to run away from others. In this way, the Little Universe reveals its rich dynamics.

This interactive installation is designed to create a serene and meditative atmosphere. Its intimate physical form invites the audience to interact with the objects, touching and moving them with their hands. Even without interfering with the dialogue between the objects, the audience can still enjoy the poetic scene by watching, listening, and contemplating the objects' behaviors and relationships.

Although the audience is not explicitly told the logic behind these behaviors, the distinct characteristics of the objects and the relationships between them are inspired by the five-element theory (五行) of Chinese philosophy. The colors of the five objects represent the five elements: metal (金), wood (木), water (水), fire (火), and earth (土). The five-element system orders the elements in two cycles: in the cycle of mutual generation (相生), each element gives rise to one other element, while in the cycle of mutual overcoming (相克), it suppresses yet another. Expressed as attracting and repelling forces between the particle systems surrounding the objects, these relationships enrich the contemplative intricacy of the Little Universe.
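The two cycles can be written down as directed relations between the elements. The minimal sketch below uses the traditional orderings of 相生 (wood → fire → earth → metal → water → wood) and 相克 (wood → earth → water → fire → metal → wood). Mapping generation to attraction and overcoming to repulsion, treating each pair symmetrically, and the inverse-distance force law are all assumptions for illustration; the source does not specify the installation's actual rules.

```python
import math

ELEMENTS = ["wood", "fire", "earth", "metal", "water"]

# Generation (相生): each element gives rise to the next one in this order.
GENERATES = {e: ELEMENTS[(i + 1) % 5] for i, e in enumerate(ELEMENTS)}
# Overcoming (相克): each element suppresses the element two steps ahead.
OVERCOMES = {e: ELEMENTS[(i + 2) % 5] for i, e in enumerate(ELEMENTS)}


def relation(a, b):
    """Return +1 (attract), -1 (repel), or 0 for the pair (a, b).

    ASSUMPTION: generation in either direction attracts, overcoming repels.
    """
    if GENERATES[a] == b or GENERATES[b] == a:
        return 1
    if OVERCOMES[a] == b or OVERCOMES[b] == a:
        return -1
    return 0


def force_on(a, pos_a, b, pos_b, strength=1.0, min_dist=1e-6):
    """Force vector (fx, fy) on element a's particles due to object b.

    ASSUMPTION: magnitude falls off as 1/distance; strength is hypothetical.
    """
    dx, dy = pos_b[0] - pos_a[0], pos_b[1] - pos_a[1]
    dist = max(math.hypot(dx, dy), min_dist)  # avoid division by zero
    scale = relation(a, b) * strength / dist
    # Unit vector toward b, scaled; a negative scale points away from b.
    return (scale * dx / dist, scale * dy / dist)
```

For example, `force_on("wood", (0, 0), "fire", (2, 0))` pulls wood's particles toward fire, while the same call with `"earth"` pushes them away. Because any two distinct elements are one or two steps apart on the five-element cycle, every pair of distinct objects is either attracting or repelling under this mapping.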

Technology

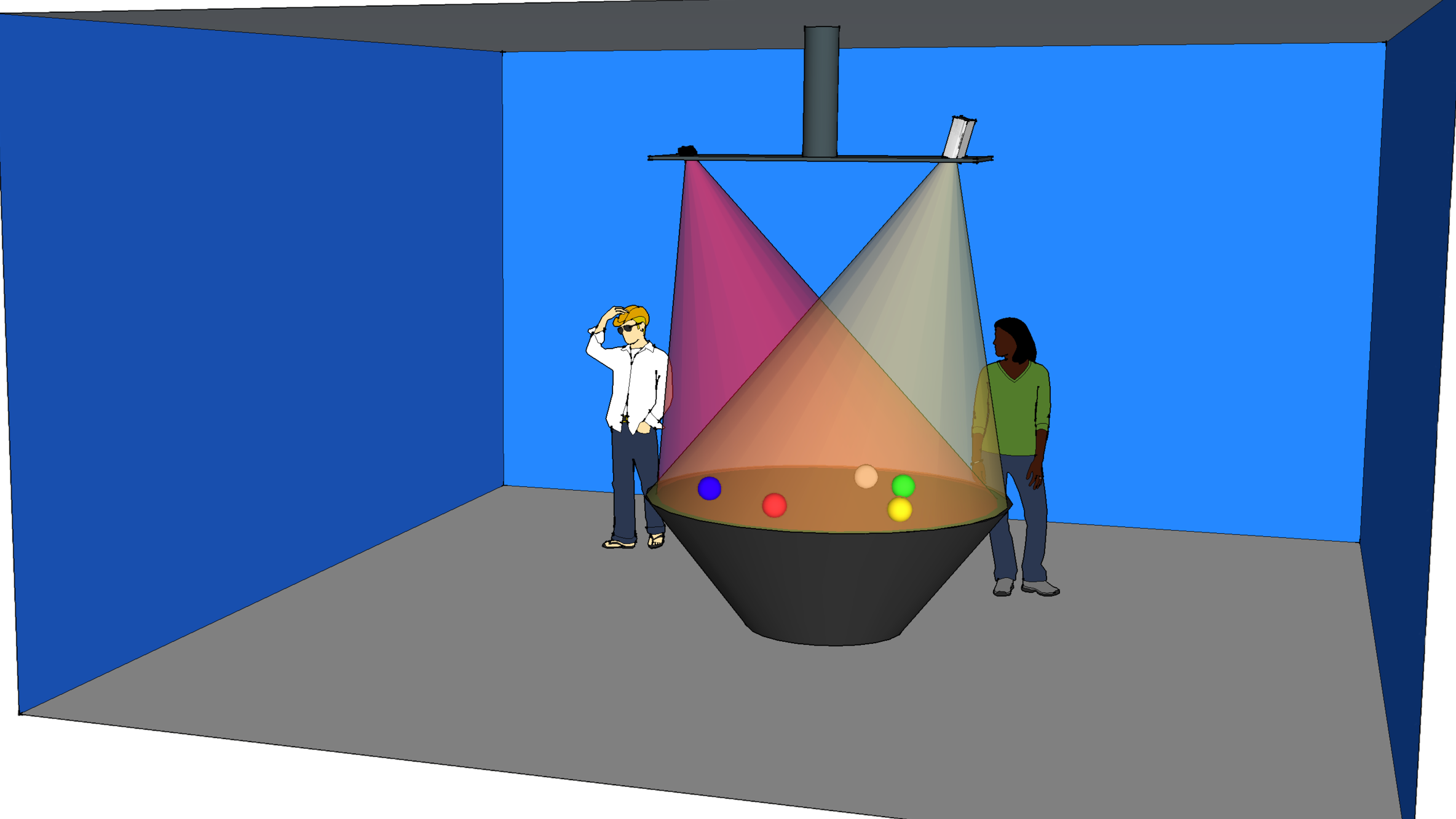

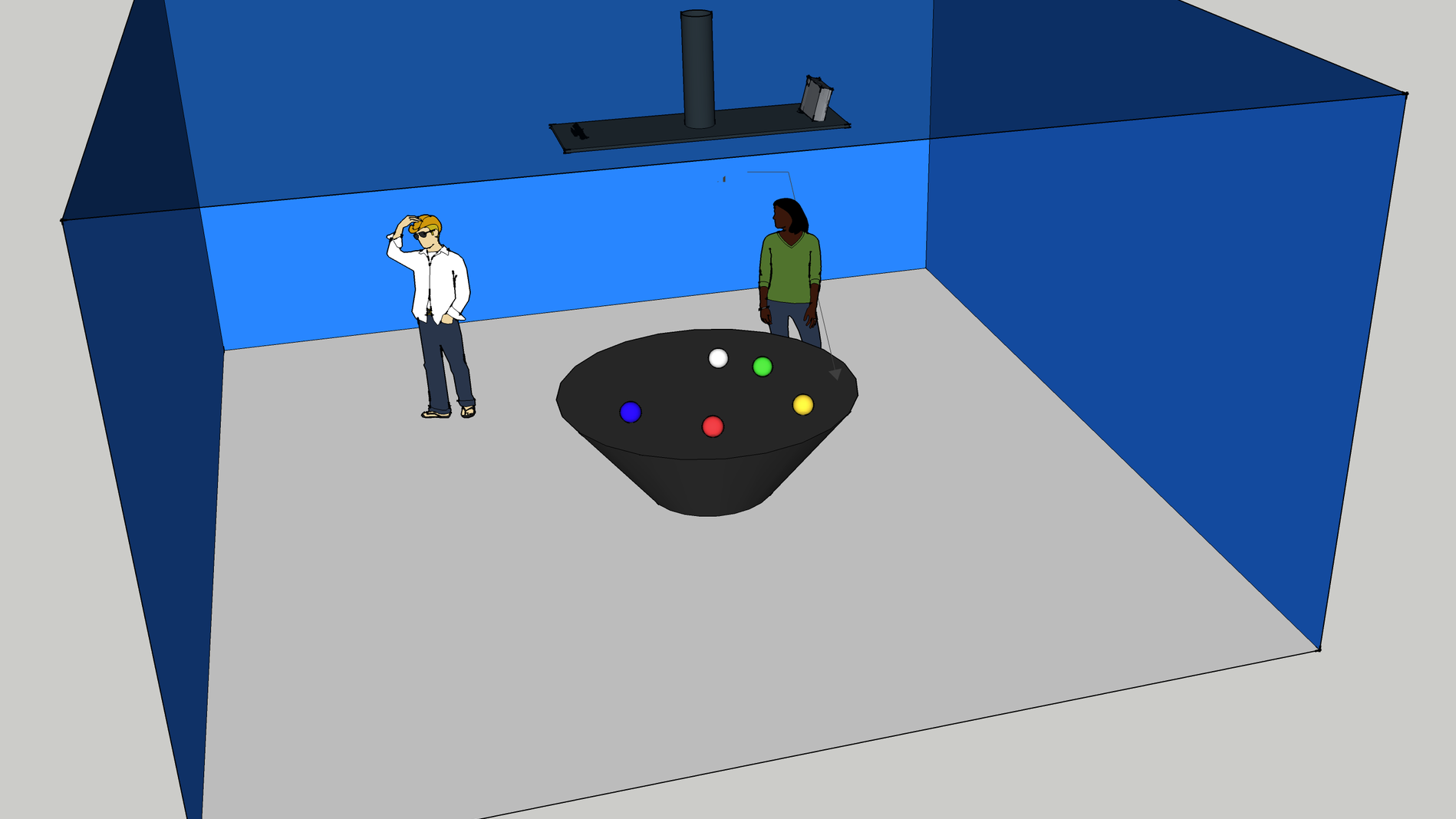

The Little Universe installation is built to integrate four components:

- poetic objects with which the users interact,

- projection system,

- sound generation system, and

- position detection/tracking system.

The poetic objects are foam balls embedded with microcontrollers and upward-facing infrared LEDs, resting on a flat surface that serves as the cosmic background. The infrared light from the objects is captured by a Microsoft Kinect motion sensor and analyzed by an openFrameworks application to locate the objects. Based on the objects' positions and relationships, the same application updates and renders the particle systems that are projected onto the objects. The rendered image is then processed by MadMapper and sent to the projector, which has been calibrated so that the projection aligns with the physical objects. The openFrameworks application also sends control messages to Ableton Live via the Open Sound Control (OSC) protocol to generate the ambient music and sound effects.

The position detection system precisely locates the objects on the table and tracks them continuously. Because the projection on each object has its own distinct character and responds to the viewer's interaction, the system must be able to distinguish the objects from one another. The application therefore has to know, at all times, the exact location of each individual object.
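One common way to keep identities between frames is greedy nearest-neighbor association: each known object takes the closest blob in the new frame, as long as it has not moved farther than a maximum jump distance. The source does not say which tracking method the application used, so this is a swapped-in illustration, and the `max_jump` threshold is hypothetical. An object left without a matching blob is reported as lost, which is the situation where re-identification would be needed.

```python
import math


def associate(tracks, blobs, max_jump=30.0):
    """Greedily match tracked objects to new blob centroids.

    tracks: dict of object id -> (x, y) position from the previous frame.
    blobs:  list of (x, y) centroids detected in the current frame.
    max_jump: hypothetical maximum movement (pixels) between frames.
    Returns (updated_tracks, lost_ids).
    """
    # Every candidate (distance, id, blob index) pair, closest first.
    pairs = sorted(
        (math.dist(pos, blob), oid, j)
        for oid, pos in tracks.items()
        for j, blob in enumerate(blobs)
    )
    updated, used = {}, set()
    for d, oid, j in pairs:
        if d > max_jump:
            break  # all remaining pairs are even farther apart
        if oid in updated or j in used:
            continue  # object or blob already matched
        updated[oid] = blobs[j]
        used.add(j)
    lost = [oid for oid in tracks if oid not in updated]
    return updated, lost
```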

The tracking system consists of camera-based computer vision combined with the infrared LEDs. Using blob detection on the Kinect's infrared stream, the application precisely locates each object by its embedded infrared LED. A signal-processing procedure then enables the system to distinguish the objects within 300 ms of detection.
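Blob detection on an infrared frame can be sketched as thresholding followed by connected-component labeling. The source does not name the blob-detection library the application used, so the dependency-free version below is a stand-in: it assumes the frame arrives as a 2D list of intensities, and `threshold` and `min_area` are hypothetical tuning values. It flood-fills each 4-connected bright region and returns the region's centroid.

```python
from collections import deque


def detect_blobs(frame, threshold=200, min_area=4):
    """Return centroids (x, y) of bright 4-connected regions in an IR frame.

    frame: 2D list, frame[y][x] = pixel intensity.
    threshold, min_area: hypothetical tuning values.
    """
    h = len(frame)
    w = len(frame[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    centroids = []
    for y in range(h):
        for x in range(w):
            if seen[y][x] or frame[y][x] < threshold:
                continue
            # Flood-fill the connected bright region starting at (x, y).
            queue = deque([(x, y)])
            seen[y][x] = True
            pixels = []
            while queue:
                cx, cy = queue.popleft()
                pixels.append((cx, cy))
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < w and 0 <= ny < h and not seen[ny][nx]
                            and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if len(pixels) >= min_area:  # ignore specks of noise
                sx = sum(p[0] for p in pixels)
                sy = sum(p[1] for p in pixels)
                centroids.append((sx / len(pixels), sy / len(pixels)))
    return centroids
```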

The Kinect's infrared stream runs at up to 30 FPS, which amounts to a 30 Hz sampling rate. Hence, by the Nyquist–Shannon sampling theorem, the system can in theory reconstruct any input signal whose bandwidth is below 15 Hz. The infrared LEDs on the objects blink continuously, producing patterns that the vision system perceives as square waves. All the LEDs generate periodic square waves with the same period of one third of a second (a 3 Hz fundamental, well below the 15 Hz limit, or 10 frames per period at 30 FPS); however, each LED's signal has a distinct duty cycle. The application tells the objects apart by measuring the duty cycles of the LEDs across the Kinect's data frames. Once the objects are identified, the application tracks them; if tracking is lost or two objects are confused, it performs a precise identification again through frequency analysis.
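Duty-cycle identification can be sketched from the numbers above: at 30 FPS and a 1/3 s period, one blink period spans 10 frames, so the duty cycle is the fraction of frames in which an LED's blob is visible over a whole number of periods. The per-object duty-cycle values below are hypothetical, since the source says only that they are distinct, and this sketch assumes the frame rate and blink period stay locked. It needs at least one full period (10 frames) of samples.

```python
FPS = 30                                    # Kinect infrared frame rate
PERIOD_S = 1 / 3                            # LED blink period
FRAMES_PER_PERIOD = round(FPS * PERIOD_S)   # 10 frames per blink period

# HYPOTHETICAL nominal duty cycles; the source says only that they differ.
DUTY_CYCLES = {"metal": 0.1, "wood": 0.3, "water": 0.5, "fire": 0.7, "earth": 0.9}


def estimate_duty_cycle(samples):
    """Fraction of 'on' frames over the most recent whole blink periods.

    samples: list of booleans, one per frame, True when the LED is visible.
    """
    n = (len(samples) // FRAMES_PER_PERIOD) * FRAMES_PER_PERIOD
    if n == 0:
        raise ValueError("need at least one full blink period of samples")
    window = samples[-n:]
    return sum(window) / n


def identify(samples):
    """Return the object whose nominal duty cycle is closest to the estimate."""
    d = estimate_duty_cycle(samples)
    return min(DUTY_CYCLES, key=lambda name: abs(DUTY_CYCLES[name] - d))
```

Because any window of whole periods contains the same number of "on" frames regardless of where it starts, the estimate does not depend on the LED's blink phase, so no synchronization between the objects and the camera is needed under these assumptions.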